Many of our studies involve monitoring and evaluating program implementation to learn more about how programs are delivered and the degree to which they are delivered as intended. Examining program implementation helps program developers, stakeholders, and evaluators better understand how certain factors (e.g., adherence, dosage, quality of delivery, participant engagement, modifications) might influence a program’s intended outcomes.

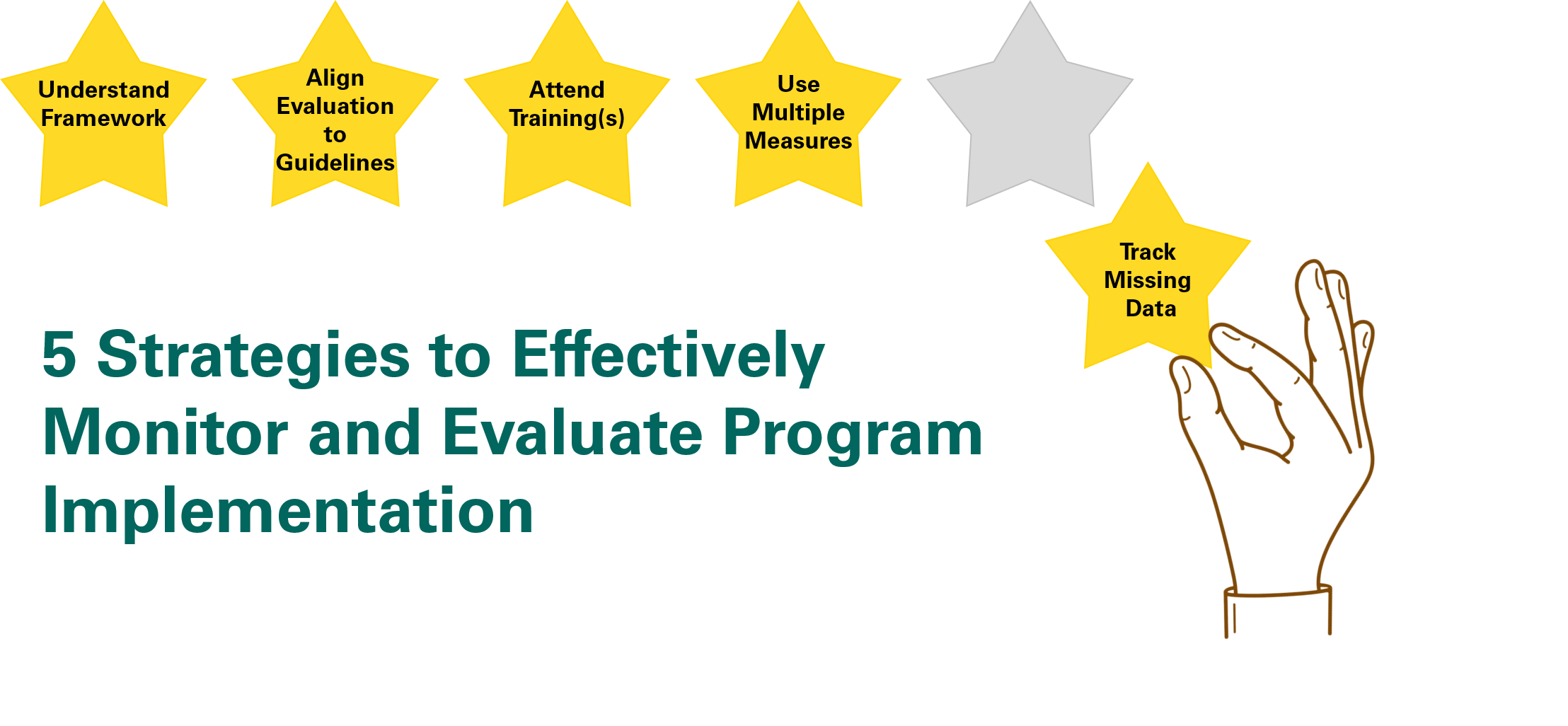

At Magnolia Consulting, we find the following five strategies help us to effectively monitor and evaluate implementation:

- Understand the theory behind the program. We recommend reviewing the program’s logic model to ensure a clear understanding of the theory behind the program. A logic model provides a visual description of how critical program components are expected to result in short-, intermediate-, and long-term outcomes. This can help evaluators understand which program components should be implemented and monitored during the study.

- Attend program training. When feasible, we recommend evaluators attend program training(s). Doing so can help evaluators learn about different program components, better understand how each component should be delivered, and become familiar with the program resources available to support implementation (e.g., English language supports). This experience can help evaluators identify when implementation is or is not going as intended.

- Align evaluation efforts to implementation guidelines. When possible, we recommend aligning evaluation efforts to program implementation guidelines. Implementation guidelines provide detailed guidance on the expected critical program features and the extent to which each component should be implemented. Along with providing guidance to those delivering programs, they can also help evaluators determine if the program is actually implemented with fidelity to the guidelines.

- Use multiple measures. We recommend selecting or creating multiple measures to properly monitor and assess various aspects of program implementation. For example, depending on the study’s goals and budget, we use measures such as implementation logs, classroom observations, surveys, interviews, and program usage data. Using multiple measures can reduce bias and allows for response validation through triangulation (i.e., cross-verification from two or more sources), which helps ensure an accurate assessment of program implementation.

- Keep track of response rates and missing data. We recommend tracking implementation data regularly to avoid missing data. For example, if a study uses weekly implementation logs, response rates and missing data should be monitored on a weekly basis to ensure that measures are completed in a timely manner. Complete data sets give evaluators more valid information about program implementation than data sets with missing data points.
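
For teams that keep implementation logs electronically, weekly tracking of response rates can be automated. The following is a minimal sketch in Python, not a description of any particular tool. The function name `weekly_response_rates`, the participant IDs, the data layout, and the 80% follow-up threshold are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def weekly_response_rates(expected, submitted):
    """Summarize log completion per week.

    expected:  dict mapping week number -> set of participant IDs who
               were expected to submit a log that week (hypothetical layout)
    submitted: iterable of (week, participant_id) pairs, one per log received
    """
    received = defaultdict(set)
    for week, participant_id in submitted:
        received[week].add(participant_id)

    report = {}
    for week, roster in expected.items():
        # Count only logs from participants who were expected that week.
        completed = received[week] & roster
        missing = sorted(roster - completed)
        rate = len(completed) / len(roster) if roster else 0.0
        report[week] = {"rate": rate, "missing": missing}
    return report


# Illustrative data only: four participants, two weeks of logs.
roster = {"T01", "T02", "T03", "T04"}
expected = {1: roster, 2: roster}
submitted = [
    (1, "T01"), (1, "T02"), (1, "T03"), (1, "T04"),
    (2, "T01"), (2, "T03"),
]

FOLLOW_UP_THRESHOLD = 0.8  # assumed cutoff; set according to the study's needs

report = weekly_response_rates(expected, submitted)
for week, row in sorted(report.items()):
    flag = " (follow up)" if row["rate"] < FOLLOW_UP_THRESHOLD else ""
    print(f"Week {week}: {row['rate']:.0%} complete, missing {row['missing']}{flag}")
```

Reviewing a summary like this each week shows who has not yet submitted a log, so reminders can go out while the information is still recent.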